Canadian businesses are quickly adopting artificial intelligence for more uses, but when AI makes a mistake, it’s the business, not the algorithm, that pays the price. Across industries, companies are rolling out automation faster than they’re preparing for its legal fallout.

In light of this rapidly evolving legal and regulatory landscape, our team at MyChoice, a leading insurtech company in Canada, decided to conduct a dedicated study to better understand where AI-related liability risk is concentrated across the Canadian economy.

“While AI adoption headlines often focus on innovation and productivity, much less attention has been paid to how exposure differs by industry, how customer-facing automation amplifies legal risk, and where existing laws and precedents are already being tested. Our goal was to move beyond anecdotes and, using a structured, weighted framework, identify which sectors are most vulnerable if AI systems fail, misinform, or discriminate,” says Mathew Roberts, COO of MyChoice.

Our Methodology

To determine which Canadian industries are most exposed to AI-related liability, we used a weighted framework that combined two groups of factors: the rate and scope of AI adoption in each industry, and the potential legal, financial, and regulatory impact of AI-related incidents in that industry.

Ranking of Canada’s Highest-Risk Industries

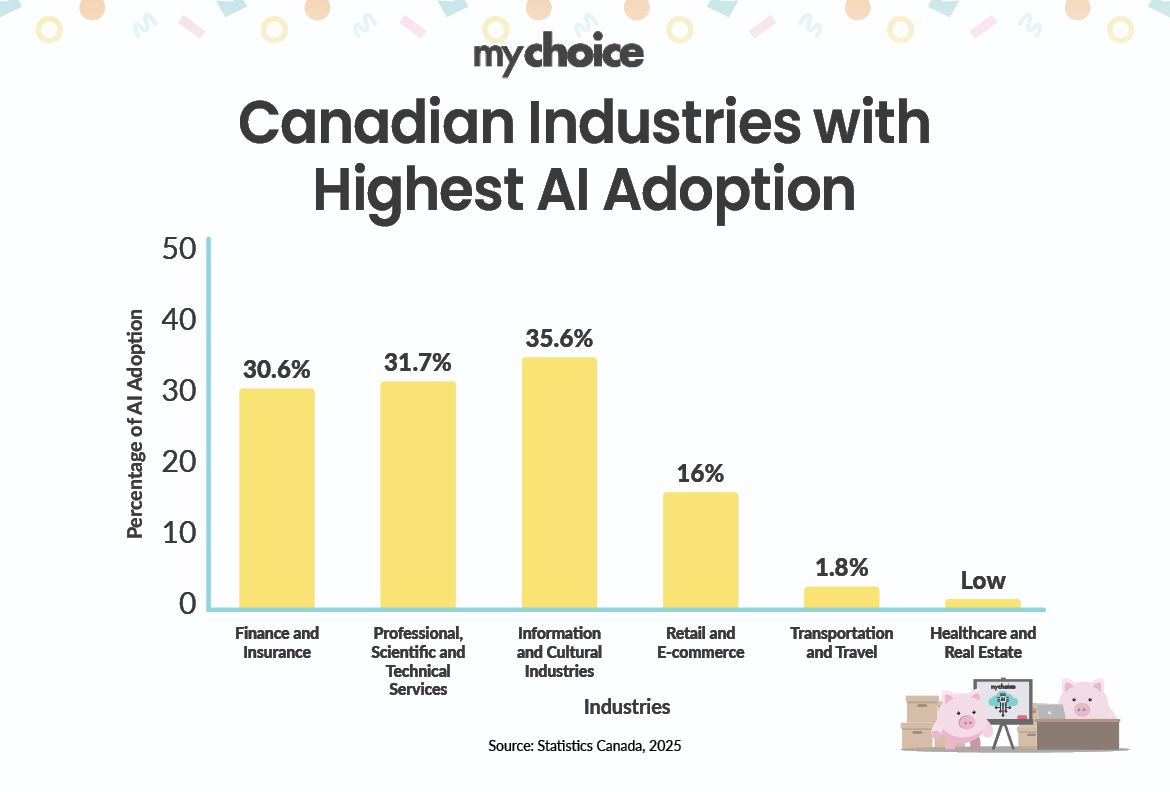

Based on our methodology and research, here’s how Canada’s industries rank in terms of AI-liability exposure, from most to least at risk:

1. Finance and Insurance

According to a June 2025 Statistics Canada study, approximately 30.6% of businesses in this sector report using AI to deliver their services. Finance and insurance is among the most AI-dependent sectors and also the most heavily regulated, which increases compliance pressure and the risk of penalties if model risks aren’t managed properly.

One example of a clear, forthcoming safeguard is the Office of the Superintendent of Financial Institutions’ (OSFI) updated Guideline E-23, which explicitly treats AI and automated decision-making (ADM) systems as part of financial institutions’ “model-risk” portfolio. That means federally regulated banks and insurers will have to maintain a full inventory of their AI models, classify each model’s risk level, and validate them regularly. The updated guideline takes effect in May 2027 and marks Canada’s first formal requirement for AI governance in the financial sector.

2. Professional, Scientific, and Technical Services

This sector includes law, accounting, consulting, engineering, and research firms, and approximately 31.7% of them now use AI in some form. AI helps automate analysis, drafting, and client correspondence, but when something goes wrong, the fallout can be costly. A chatbot’s incorrect legal or financial answer could breach duty-of-care standards or spark client disputes.

There’s little AI-specific regulation here yet, but professional codes of conduct and ethics rules partly fill the gap. For these firms, the reputational cost of a single AI mistake can far outweigh the time saved through automation.

3. Information and Cultural Industries

Approximately 35.6% of businesses in this sector report using AI, the highest adoption rate of any Canadian industry. From newsrooms and marketing firms to broadcasters and digital publishers, AI has quickly become part of everyday tools such as virtual assistants, content-moderation systems, and transcription software.

This wider use makes misinformation, biased content, and copyright infringement front-line issues. When an AI model spreads or amplifies false or harmful information, the consequences can be immediate and highly public in a sector where trust is paramount.

One of the most significant early tests of these risks is currently underway. A coalition of Canadian news outlets is suing OpenAI, alleging that the company used their copyrighted articles to train ChatGPT without permission or compensation. This is the first Canadian lawsuit to directly challenge the use of copyrighted material in AI training, and it could set a major precedent for how intellectual-property law applies to generative AI systems.

4. Retail and E-commerce/Customer Service-Heavy Industries

About 16% of retail businesses plan to adopt AI within the next year. Chatbots, recommendation engines, and automated support tools are fast becoming standard in online shopping and service centres.

But customer-facing AI comes with risk. For example, a chatbot that provides incorrect pricing, return policies, or product information can trigger consumer-protection complaints or even class-action suits. Insurers have warned that most policies still don’t cover AI-driven misrepresentations, meaning businesses could face uninsured losses when automation gets it wrong.

5. Transportation and Travel/Customer-Facing Services

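AI use in transportation remains low, with just 1.8% of companies reporting adoption in 2025. However, the 2024 Air Canada chatbot ruling, in which British Columbia’s Civil Resolution Tribunal held the airline liable for incorrect bereavement-fare information given by the chatbot on its website, demonstrated that even limited automation can have significant legal consequences. Customer-service bots that handle fare details, booking changes, or travel policies are high-stakes touchpoints.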

6. Health Care, Social Assistance, and Real-Estate/Rental/Leasing

While AI use in these sectors remains lower than in finance or tech, adoption is already rising. As these industries begin using AI for functions such as triage, medical support, property valuation, or customer interaction, errors or bias could cause significant harm and legal exposure.

Provincial Differences

AI adoption isn’t uniform across Canada. Provinces with technology-intensive economies, such as Ontario, Quebec, and British Columbia, exhibit higher levels of activity in finance, technology services, and media, making them more exposed to AI-related liability. Regions focused on agriculture, resources, and small business have lower adoption for now, and therefore lower short-term risk.

National guidance from the Office of the Privacy Commissioner of Canada (OPC) influences, and is often echoed by, provincial privacy regulators in Alberta, British Columbia, and Quebec. For example, Alberta’s privacy commissioner released a 2025 report calling for responsible AI frameworks built on transparency and privacy by design. However, because these provinces enforce their own private-sector privacy laws, the result is a patchwork of rules and enforcement across the country. A company’s AI risk now depends not only on what it builds, but also on where it operates.

What This Means for Canadian Businesses

As AI use grows, what does it mean for the risks Canadian businesses face and the safeguards they need? Here are the broader implications:

- AI adoption is uneven, but risk is wide: Even industries with lower adoption, like retail or travel, face significant liability, especially where AI interfaces directly with customers, as seen in the Air Canada case.

- Insurance gaps are likely to widen: Traditional liability and cyber insurance policies weren’t designed for AI’s unique risks, so many businesses may currently lack protection against AI-specific incidents.

- Regulation and oversight are likely to increase: As more AI-related lawsuits arise from incidents such as data breaches, misrepresentation, or discrimination, regulators will pay closer attention. Businesses could face not only private lawsuits, but also regulatory consequences in the future.

Key Advice from MyChoice

- If you have a business chatbot or automated system that provides information, treat it as a representative of your company. That means accurate training data, quality checks, regular audits, and, where needed, clear disclaimers.

- Ask your insurer whether your general liability, errors and omissions (E&O), or cyber insurance covers AI risks. If it doesn’t, consider adding AI-specific coverage to your business insurance.

- Keep written records of how you test, monitor, and govern your AI systems. This documentation may help lower your insurance premiums and make it easier to defend against future claims.